I'm not sure this should be called a real lesson, since I have only recently been exposed to TensorFlow. My later work will probably involve visualizing massive data, and I prefer to do things on Windows (just my fondness for the old Windows API). So the first time I read the Mandelbrot example in the book "Get started with TensorFlow", I wondered whether I could visualize the process on Windows. I made a slight modification to the example, as follows:

```python
# TensorFlow 1.x graph-mode code (Session, Variable, Saver)
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt

# Grid of complex starting points c = x + iy
Y, X = np.mgrid[-1.3:1.3:0.005, -2:1:0.005]
Z = X + 1j*Y

c = tf.constant(Z.astype(np.complex64))
zs = tf.Variable(c)                               # current z for every point
ns = tf.Variable(tf.zeros_like(c, tf.float32))    # per-point iteration counts

# One Mandelbrot step: z <- z^2 + c
zs_square = tf.pow(zs, 2)
zs_final = tf.add(zs_square, c)
# tf.complex_abs (pre-1.0 API) was replaced by tf.abs, which accepts complex tensors
not_diverged = tf.abs(zs_final) < 4

update = tf.group(zs.assign(zs_final),
                  ns.assign_add(tf.cast(not_diverged, tf.float32)),
                  name="update")
output = tf.identity(ns, name="output")

saver = tf.train.Saver()
sess = tf.Session()
# tf.initialize_all_variables() is the deprecated name of this initializer
sess.run(tf.global_variables_initializer())

#tf.assign(zs, sess.run(zs))
#tf.assign(ns, sess.run(ns))

tf.train.write_graph(sess.graph_def, "models/", "graph.pb", as_text=True)
saver.save(sess, "models/model.ckpt")

for i in range(200):
    sess.run(update)   # the loop body must be indented

plt.imshow(sess.run(ns))
plt.show()
sess.close()
```

And it produced the wonderful Mandelbrot fractal:

To appreciate the whole process of generating the Mandelbrot fractal, I wondered whether I could freeze the model and load it under Windows. At each step, I would run the "update" node and then fetch the result through the "output" node.

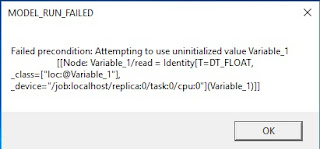
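The frame-by-frame loop described above (run "update", then fetch "output", repeat) can be sketched in plain NumPy, without TensorFlow. This is a minimal sketch, not the graph itself. NumPy arrays stand in for the `zs` and `ns` Variables, and the function name `mandelbrot_frames` and its `steps`/`step` parameters are hypothetical.

```python
import numpy as np


def mandelbrot_frames(steps=200, step=0.005):
    """Yield a copy of the iteration-count array after every update.

    Mirrors the graph above: zs starts at c, and each step applies
    zs <- zs**2 + c and adds 1 to ns wherever |zs| < 4.
    """
    y, x = np.mgrid[-1.3:1.3:step, -2:1:step]
    c = (x + 1j * y).astype(np.complex64)
    zs = c.copy()                          # stands in for the zs Variable
    ns = np.zeros(c.shape, np.float32)     # stands in for the ns Variable
    for _ in range(steps):
        # Diverged points overflow to inf/nan; their comparisons are just False.
        with np.errstate(over="ignore", invalid="ignore"):
            zs = zs * zs + c                              # "update", part 1
            ns += (np.abs(zs) < 4).astype(np.float32)     # "update", part 2
        yield ns.copy()                                   # "output"
```

Each yielded frame could be passed to `plt.imshow`, with `plt.pause` between frames, to watch the set take shape. Copying `ns` on every step keeps earlier frames from changing as the loop continues.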

However, this doesn't work: freezing replaces the variables with constants, so they can never be updated again. See the following complaint:

I think this is understandable. The intention of TensorFlow here is to let a trained model run as quickly as possible, so it's no surprise that the variables are eliminated in the end.
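A toy NumPy analogy makes the freezing problem concrete. It is not what TensorFlow's freezing code does internally. In this sketch, the current `zs` and `ns` are captured as read-only constants. The function standing in for the frozen graph can compute one step from those constants, but it has nowhere to store the result. The names `make_c`, `freeze`, and `run_frozen` are hypothetical.

```python
import numpy as np


def make_c(step=0.005):
    # Same complex grid as the TensorFlow example
    y, x = np.mgrid[-1.3:1.3:step, -2:1:step]
    return (x + 1j * y).astype(np.complex64)


def freeze(zs, ns, c):
    """Toy analogy of freezing: snapshot zs and ns as read-only constants.

    The returned function plays the role of running the frozen graph:
    it computes one step from the constants, but never writes it back.
    """
    zs_const = zs.copy()
    ns_const = ns.copy()
    zs_const.flags.writeable = False   # constants cannot be assigned to
    ns_const.flags.writeable = False

    def run_frozen():
        with np.errstate(over="ignore", invalid="ignore"):
            zs_next = zs_const * zs_const + c
            return ns_const + (np.abs(zs_next) < 4).astype(np.float32)

    return run_frozen
```

Calling the returned function twice gives the same frame both times, which is why the animation cannot advance.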

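If we uncomment the following lines and save the graph in binary form:

```python
# Note: tf.assign only adds an Assign op to the graph; it has no effect
# unless that op is itself run in a session.
tf.assign(zs, sess.run(zs))
tf.assign(ns, sess.run(ns))

tf.train.write_graph(sess.graph_def, "models/", "graph.pb", as_text=False)
```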

In fact, it seems the variables still don't get initialized, even though we do it explicitly in the program:

I am still working on it, but I'm logging it here as a reminder: if you are applying TensorFlow to a scenario that requires no explicit input, please rethink it.
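One possible workaround, which this post did not try, is to make the state an explicit input. Suppose `zs` and `ns` were fed into the graph (for example through `tf.placeholder` in TensorFlow 1.x) and the new values were fetched as outputs. The graph would then contain no variables for freezing to remove, and the caller would carry the state between steps. The NumPy sketch below shows only the shape of that design. The names `mandelbrot_step` and `run_steps` are hypothetical.

```python
import numpy as np


def mandelbrot_step(zs, ns, c):
    """One update with all state passed in and returned; nothing is stored.

    Inputs are not modified, so the caller fully owns zs and ns.
    """
    with np.errstate(over="ignore", invalid="ignore"):
        zs_next = zs * zs + c
        ns_next = ns + (np.abs(zs_next) < 4).astype(np.float32)
    return zs_next, ns_next


def run_steps(steps, step=0.005):
    # Same grid as the TensorFlow example
    y, x = np.mgrid[-1.3:1.3:step, -2:1:step]
    c = (x + 1j * y).astype(np.complex64)
    zs, ns = c, np.zeros(c.shape, np.float32)
    for _ in range(steps):
        zs, ns = mandelbrot_step(zs, ns, c)   # state flows through the caller
    return zs, ns
```

Because the caller owns `zs` and `ns`, it could also save them between steps and resume later. In the TensorFlow version, the checkpoint written by `saver.save` plays that role.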
